Elon Musk's Grok AI Sparks Privacy Concerns Over Medical Data Analysis

Palo Alto, Thursday, 28 November 2024.

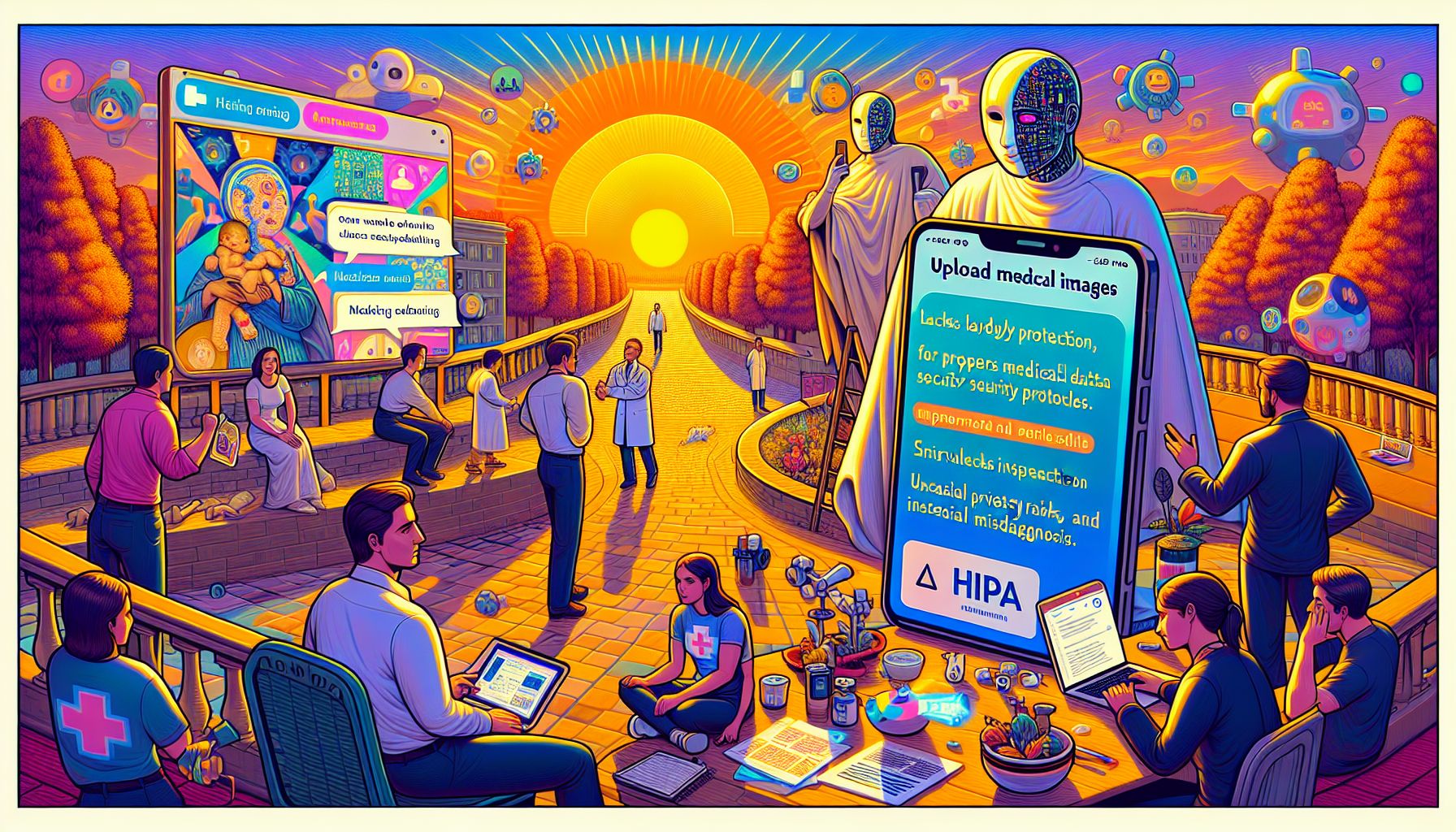

In a controversial move in November 2024, Elon Musk encouraged X users to upload medical images for analysis by his AI chatbot Grok. While some users report successful diagnoses, health experts warn of serious privacy risks and potential misdiagnosis, as the platform lacks HIPAA protection and proper medical data security protocols.

Grok AI: A New Player in AI Technology

Elon Musk’s introduction of Grok AI marks a significant leap in AI chatbot technology. Developed by xAI, Grok is designed to compete with established platforms like ChatGPT and Gemini. Launched in November 2023, Grok is based on a large language model and is available to X Premium users. The chatbot has been marketed for its humorous and somewhat irreverent responses, which distinguish it from competitors that are often more conservative in their outputs[1].

Innovative Features and Growing Controversies

Grok’s capabilities include image analysis, known as AI Vision, and recent updates have allowed it to interpret medical images, such as X-rays and MRIs. However, this innovation comes with significant controversy. Experts have raised concerns about privacy risks, as the platform encourages users to submit personal medical data without the safeguards typical of healthcare environments. This has sparked anxiety about potential data misuse and about diagnostic inaccuracies, which some users have reported[2].

Privacy Concerns and Security Risks

The primary concern with Musk’s approach is the lack of HIPAA compliance, which leaves users’ sensitive medical information vulnerable. Critics argue that Grok’s reliance on user-submitted data rather than secure, de-identified medical databases could lead to privacy breaches. Experts such as Bradley Malin of Vanderbilt University emphasize the risks of sharing personal health information on social media platforms, where the data might not be properly protected[3].

Balancing Innovation with Responsibility

As Grok continues to develop, it faces the challenge of balancing cutting-edge innovation with ethical responsibility. While the AI’s ability to analyze medical data holds potential for advancing healthcare technology, the current lack of stringent privacy measures poses a significant hurdle. The AI community and users alike are calling for more robust security protocols to ensure that technological advancements do not come at the expense of user privacy and safety[4].